Statistical hypothesis testing: minimize bias and help ensure your results are significant

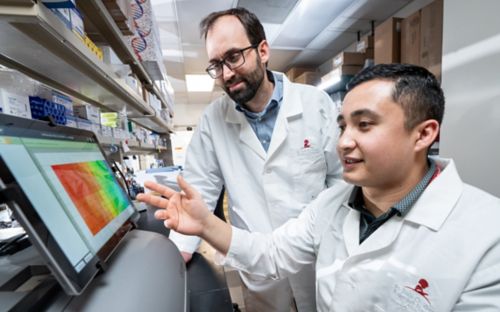

In addition to reviewing Richard Harris’ Rigor Mortis, Charles Rock, PhD, and Jiangwei Yao, PhD, address methods and practices that improve scientific rigor and produce more meaningful results.

There are three kinds of lies: lies, damned lies, and statistics.

- Benjamin Disraeli (British Prime Minister 1874–1880)

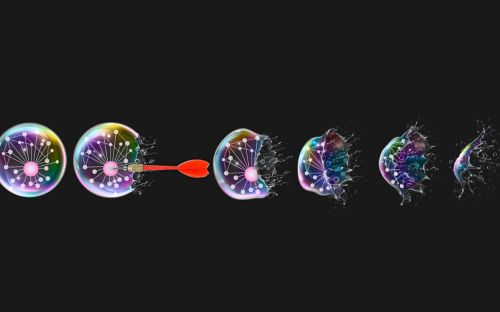

Statistical hypothesis testing provides an objective standard to determine if a result is significant. In other words, is what just happened really what happened? When used properly, it’s a powerful tool for minimizing confirmation bias.

The 4 steps to statistical hypothesis testing

First, formulate the null and alternative hypotheses. The null hypothesis is the one you intend to reject, typically that there is no effect. The alternative hypothesis rivals the null hypothesis. This way, you have two competing explanations to weigh against the data.

Second, choose the appropriate statistical test and the statistical significance level for hypothesis testing.

Third, collect the data (observe the phenotype with and without drug) and perform the statistical test on the data.

Fourth, either reject or fail to reject the null hypothesis based on whether the p-value from the predetermined statistical test falls below the predetermined significance level.
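The four steps can be sketched in a few lines of Python. This is a minimal illustration, not a recommended analysis pipeline: the measurements are made-up values, the function name `permutation_p_value` is hypothetical, and an exact permutation test on the difference in group means is just one of many tests that could be chosen in step two.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(control, treated):
    """Two-sided exact permutation test on the difference in group means."""
    pooled = control + treated
    n_treated = len(treated)
    n_control = len(pooled) - n_treated
    observed = abs(mean(treated) - mean(control))
    total = sum(pooled)
    as_extreme = 0
    relabelings = 0
    # Try every way of assigning the "treated" label to the pooled values.
    for idx in combinations(range(len(pooled)), n_treated):
        t_sum = sum(pooled[i] for i in idx)
        diff = abs(t_sum / n_treated - (total - t_sum) / n_control)
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            as_extreme += 1
        relabelings += 1
    return as_extreme / relabelings


# Step 1: null hypothesis = the drug does not change the measurement;
#         alternative hypothesis = it does.
# Step 2: fix the test (above) and the significance level before seeing data.
ALPHA = 0.05

# Step 3: collect the data (hypothetical measurements without and with drug).
control = [10.1, 9.8, 10.3, 10.0, 9.9]
treated = [11.2, 11.0, 11.5, 10.9, 11.3]
p = permutation_p_value(control, treated)

# Step 4: reject the null only if p falls below the predetermined level.
reject_null = p < ALPHA
print(f"p = {p:.4f}, reject null: {reject_null}")
```

In this made-up dataset the two groups do not overlap, so only 2 of the 252 possible relabelings are as extreme as the observed one, giving p ≈ 0.008. The point of the sketch is the ordering: the test and the threshold are fixed before the data are examined.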

Two common traps of statistical hypothesis testing

While these steps appear quite straightforward, there are two common traps that investigators must avoid.

The most egregious statistical malpractice is p-hacking.

Also called data dredging, or data fishing, p-hacking is the inappropriate use of statistical hypothesis testing to show something is true when it actually isn’t. Here, “p” refers to the p-value: the probability of obtaining a result at least as extreme as the one observed if the null hypothesis were true. p-Hacking includes excluding data that disfavors the hypothesis or repeating experiments until one finally passes the significance test.

Backward rationalizations such as “the experiment wasn’t done properly,” without specifying what exactly wasn’t done correctly, or “that data point is an outlier,” without evidence, are too often used to justify data exclusion.
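To see why repeating an experiment until it passes is so damaging, consider a small calculation. It assumes the null hypothesis is true, that each repeat is independent, and that only the first "significant" attempt gets reported. The function name `false_positive_chance` is made up for this illustration.

```python
def false_positive_chance(attempts, alpha=0.05):
    """Chance that at least one of `attempts` independent tests of a
    true null hypothesis yields p < alpha."""
    return 1 - (1 - alpha) ** attempts


for attempts in (1, 5, 14):
    chance = false_positive_chance(attempts)
    print(f"{attempts:>2} attempts: {chance:.1%} chance of a false positive")
```

Under these assumptions, the nominal 5% error rate grows to roughly 23% after five attempts and passes 50% by the fourteenth, even though nothing real is happening.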

Reporting statistical significance in data without forming the hypothesis beforehand is another problem.

It is important to understand that some comparisons within large biological datasets will appear statistically significant at the 95% level by chance alone, even when no real effect exists.

Reporting these differences as significant without forming a hypothesis is exploratory data analysis and not statistical hypothesis testing. In other words, your results are misleading when you go through data to find another result and then change your hypothesis to match. It’s fine to report the findings as a surprise; it’s another thing entirely to cast them as something that was expected.

Statistical significance in exploratory analysis of large datasets must be much more stringent to reduce the background level of false significance.
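One common way to make the threshold more stringent is the Bonferroni correction, which divides the significance level by the number of comparisons. The post does not name a specific method, so treat this as one illustrative option; the p-values below are invented and `bonferroni_significant` is a hypothetical helper.

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Flag p-values that stay significant after a Bonferroni correction."""
    threshold = alpha / len(p_values)  # stricter cutoff for each comparison
    return [p < threshold for p in p_values]


# Hypothetical p-values from five exploratory comparisons.
p_values = [0.001, 0.012, 0.030, 0.049, 0.200]

naive = [p < 0.05 for p in p_values]
corrected = bonferroni_significant(p_values)

print("naive:    ", naive)      # four of five pass at p < 0.05
print("corrected:", corrected)  # only the first passes at p < 0.01
```

The correction is conservative, which is the intent here: it trades some sensitivity for a lower background of false significance.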

The result of exploratory data analysis should be the formulation of a hypothesis that undergoes separate statistical hypothesis testing in a well-designed experiment.

Most biological experiments use a confidence level of 95%, but the scientific community is becoming increasingly aware of the limitations of this threshold.

If all scientific papers use the 95% threshold, this means that about 5% of the comparisons in which no real effect exists will still be reported as significant.

In 2009, there were about 850,000 papers recorded in PubMed.

If there were an average of 10 experimental comparisons per paper and all used the 95% rule, this would mean that potentially 425,000 false-positive results were reported in 2009.
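The arithmetic behind that figure is short enough to write out. The 10 comparisons per paper is the post's own hypothetical, and the result is a worst-case count that treats every comparison as one where no real effect exists.

```python
papers = 850_000             # approximate PubMed records for 2009
comparisons_per_paper = 10   # hypothetical average used in the text
alpha = 0.05                 # 95% rule: 5% chance of a false positive per test

total_comparisons = papers * comparisons_per_paper
expected_false_positives = round(total_comparisons * alpha)

print(f"{total_comparisons:,} comparisons -> "
      f"{expected_false_positives:,} potential false positives")
```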

Perhaps the real number of false positives is not this high, but the widespread adoption of the 95% confidence standard definitely contributes to the ‘reproducibility crisis’ in biomedical research.

There is mounting pressure on journals and granting agencies to tighten the significance threshold in life science research from p < 0.05 to p < 0.005.

Adopt tighter statistical standards to avoid the reproducibility trap and maximize the impact of your scientific career.

Jiangwei Yao, PhD, contributed to this post.